Once in a while you or your organization gets an email to the effect of:

“I am an independent website security researcher, I’ve identified a bug on your website, here’s my evidence, please pay me for my responsible disclosure, thank you, I am nice.”

Reasonably, you toss it in the trash where it belongs. Then come repeated requests to pay a bug bounty you never offered.

It’s spam, of course—potted meat of the common variety—but something about the message suggests plausibility, and in fact if you follow up on some of the evidence, you might discover that the findings are valid. Technically, at least. On the surface. So are the warnings legitimate, as well? Is your website security compromised?

Is Your Website Security Compromised?

To begin with, the alleged vulnerability usually isn’t one, and its plausibility depends on a scary interpretation of normal behavior, or on a mischaracterization of the method used to demonstrate it. For example, the frequently reported “x-frame-bypass bug” doesn’t actually expose your site to “clickjacking” at all.

But keeping up with every variant of this kind of thing is a tremendous waste of time. And even though unsolicited emails like these should always be deleted and their senders blocked, the questions percolate. Am I safe? Have I missed something? Why is my cat on my keyboard?

Because that’s just what cats do, of course, but the rest of it bears consideration.

General Website Security Principles

“What could possibly go wrong?” All kinds of things. But there are some general website security principles you can keep in mind, along with the bear spray in your pocket.

Never trust the user

Little Bobby Tables is one of the most famous memes on the internet, at least among developers. It illustrates the classic “SQL injection” attack vector, the defense for which is simply stated as “always sanitize your inputs.”

If you’re running anything other than a static blog, you probably accept user input somewhere. Contact forms, search queries, logins… all take text typed in by the user and act on it, usually involving data processing and retrieval in a database. Even URLs can be crafted to probe for and execute attacks, since they drive the complex program which is your site.

Best website security practice dictates never taking that input at face value. You strip out HTML, filter against text patterns, enforce strict limits, and reject or ignore anything out of bounds. If you’re expecting an integer, reject anything that isn’t one. If you’re accepting strings, remove tags. Validate everything.
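A minimal Python sketch of those rules, assuming a numeric account ID field and a free-text search box. The function names and the 100-character limit are hypothetical; a real application would normally lean on its framework’s own validators.

```python
import re
from html.parser import HTMLParser

MAX_QUERY_LENGTH = 100  # hypothetical limit; choose one that suits your form


def parse_account_id(raw: str) -> int:
    """Accept only 1 to 10 ASCII digits and reject anything else outright."""
    if not re.fullmatch(r"[0-9]{1,10}", raw):
        raise ValueError(f"invalid account ID: {raw!r}")
    return int(raw)


class _TextCollector(HTMLParser):
    """Keeps the text found between tags and drops the tags themselves."""

    def __init__(self) -> None:
        super().__init__(convert_charrefs=True)
        self.parts: list[str] = []

    def handle_data(self, data: str) -> None:
        self.parts.append(data)


def clean_search_text(raw: str) -> str:
    """Remove HTML tags, trim whitespace, and enforce a length limit."""
    collector = _TextCollector()
    collector.feed(raw)
    collector.close()  # flush any buffered text
    text = "".join(collector.parts).strip()
    if len(text) > MAX_QUERY_LENGTH:
        raise ValueError("search text is too long")
    return text


print(parse_account_id("1234"))                   # 1234
print(clean_search_text("<b>grizzly</b> bears"))  # grizzly bears
```

Stripping tags on the way in is only one layer; text that is later written back into a page still needs to be escaped on the way out.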

Another simple example of the SQL injection attack might look like this:

Suppose we have a form on our website that allows us to list information about our own account—or even, if we have adequate permission, any account managed by the site. This form could have a field that accepts a value representing that account’s ID. When the form is submitted, we act on it by querying the database using the input in place of a variable:

String query = "SELECT * FROM accounts WHERE custID='" + request.getParameter("id") + "'";

Now, if the user enters the string ' OR '1'='1 in the form, the query becomes:

String query = "SELECT * FROM accounts WHERE custID='' OR '1'='1'";

The injected quote closes the string early, and because '1'='1' is true for every row, this returns every column of information about every customer in the database, which is certainly not what you intended.
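The standard fix is to stop building SQL out of strings and to pass the input as a bound parameter, so the database treats it strictly as data. The snippet above is Java, where the equivalent is a PreparedStatement; here is a self-contained sketch using Python’s built-in sqlite3 module, with a hypothetical in-memory accounts table and an injection input of the classic ' OR '1'='1 form.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """Build a throwaway in-memory database with two hypothetical customers."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE accounts (custID TEXT, name TEXT)")
    conn.executemany(
        "INSERT INTO accounts VALUES (?, ?)",
        [("1234", "Zaphod Beeblebrox"), ("5678", "Ford Prefect")],
    )
    return conn


def find_account_unsafe(conn: sqlite3.Connection, cust_id: str) -> list:
    # Vulnerable: the user's input is pasted straight into the SQL text.
    query = "SELECT * FROM accounts WHERE custID='" + cust_id + "'"
    return conn.execute(query).fetchall()


def find_account_safe(conn: sqlite3.Connection, cust_id: str) -> list:
    # Safe: the ? placeholder sends the input separately, strictly as a value.
    return conn.execute(
        "SELECT * FROM accounts WHERE custID = ?", (cust_id,)
    ).fetchall()


conn = make_demo_db()
attack = "' OR '1'='1"
print(len(find_account_unsafe(conn, attack)))  # 2: every customer comes back
print(len(find_account_safe(conn, attack)))    # 0: no custID equals that text
```

Input validation still matters, but parameterized queries close this particular hole even when validation misses something.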

Keep your cards close

Your website isn’t just a display of information. It’s an application and tosses around a lot of data as it loads pages, responds to user activities, and so on. Much of this information is stored in the browser itself and passed from page to page as you navigate and interact with it. Anyone can use browser tools to inspect:

- The HTML source behind the page.

- The Document Object Model (DOM): the logical structure of the loaded page, including “hidden” elements that are never displayed (such as hidden form fields), as well as “events” handled by scripts.

- The data in browser storage: cookies, local storage, and session storage.

- The many scripts that enable interaction, animation, and so on.

At the very least, this data needs to be obscure. It should be encoded or encrypted, which, by the way, are not the same thing: encoding (Base64, for example) can be reversed by anyone, while encryption can only be reversed with a key. The point is to obscure, as much as possible, what the information is, what it’s used for, what its values are, and what patterns might be discerned to fake it successfully. So don’t call that important variable ‘your_user_id’; call it ‘grommish’, and if possible make its values utter gibberish rather than legible text or serial numbers.
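As a quick illustration of why encoding alone is not protection, here is a short Python sketch (the cookie value is hypothetical): Base64 makes text look like gibberish, but anyone can reverse it without a key.

```python
import base64

# A hypothetical cookie value "protected" only by Base64 encoding.
encoded = base64.b64encode(b"user_id=1234").decode("ascii")
print(encoded)  # dXNlcl9pZD0xMjM0

# Reversing it requires no secret at all.
print(base64.b64decode(encoded).decode("ascii"))  # user_id=1234
```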

Also, keep this data to a useful minimum. Sometimes it’s better to sacrifice a little “responsiveness” for security and let the application take a little extra time to retrieve something from the database, rather than just tossing it into the DOM and letting it propagate.

For a very simple example, don’t keep a user’s information entirely in a browser cookie or session storage, where it can easily be inspected:

user_id = 1234
user_first_name = Zaphod
user_last_name = Beeblebrox
user_session_id = abcd1234
last_query = 'gargle blaster'

Instead, store the simple value

natter = grommish

and when the next page is requested, look up what that actually means in the application database, and proceed accordingly.
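A minimal Python sketch of that lookup pattern, assuming an in-memory dictionary stands in for the application database (a real site would use its framework’s server-side session store). The browser only ever receives a random, unguessable token, generated here with the standard secrets module; everything meaningful stays on the server.

```python
import secrets
from typing import Optional

# Hypothetical server-side store mapping token -> user data. In production
# this would be the application database or the framework's session backend.
SESSIONS: dict = {}


def start_session(user: dict) -> str:
    """Create an unguessable token, remember the user server-side, return the token."""
    token = secrets.token_urlsafe(32)  # 32 random bytes as URL-safe text
    SESSIONS[token] = user
    return token  # the only value the browser ever sees, e.g. as a cookie


def load_session(token: str) -> Optional[dict]:
    """On the next request, look up what the cookie value actually means."""
    return SESSIONS.get(token)


def end_session(token: str) -> None:
    """Forget the session, e.g. on logout."""
    SESSIONS.pop(token, None)


cookie_value = start_session({"user_id": 1234, "first_name": "Zaphod"})
print(load_session(cookie_value)["first_name"])  # Zaphod
print(load_session("grommish"))                  # None: guessing gets nothing
```

Because the token is random rather than serial, there is no pattern to discern and no neighboring value to try.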

Keep the moat high and the portcullis ready

Back in the day, as we like to say, a lot of the web depended on the simplest possible interaction between a browser and a web server—the machinery and software that actually responds to an incoming URL request by returning information. When you navigated to a URL you would be served either the content of index.html, which might have some familiar elements like texts and links and so on, or in the absence of such a file you would actually see the content of the directory itself, a raw listing of files such as images, texts, or even executable programs for download.

Now, of course, you don’t want a visitor seeing all that stuff and directly accessing it. So the web server is configured to specifically permit access to, and execution of, a very few files. Any attempt to directly access anything other than index.php, for example, is either ignored by the server, or directed to a common “talk to the hand” page.

Responsibility for this is borne heavily by the web application itself, which needs to be written, and tested, to anticipate this kind of thing. But a fair portion of it is usually implemented at the outset by crafting control files such as .htaccess for Apache servers: instruction sets that tell the server what to do with requests matching certain patterns. These instructions can, for example, redirect any HTTP request to HTTPS, ensuring that visitors always get the protection of that protocol. They can also strictly control which requests are accepted at all, and route or reject them accordingly.

One section of an .htaccess file might look like this, blocking access to a great many files that we never want a web browser to see:

# Match files by their extensions or names that are common in Drupal and similar applications.
# This includes various configuration files, scripts, SQL files, templates, etc.
<FilesMatch "\.(engine|inc|info|install|make|module|profile|test|po|sh|.*sql|theme|twig|tpl(\.php)?|xtmpl|yml)(~|\.sw[op]|\.bak|\.orig|\.save)?$|^(Entries.*|Repository|Root|Tag|Template|composer\.(json|lock)|web\.config)$|cron\.php|install\.php|^(CHANGELOG|COPYRIGHT|INSTALL.*|LICENSE|MAINTAINERS|README|UPDATE).txt$|^#.*#$|\.php(~|\.sw[op]|\.bak|\.orig|\.save)$">
  # If the mod_authz_core module is available (Apache 2.4), deny access to all users.
  <IfModule mod_authz_core.c>
    Require all denied
  </IfModule>
  # Fallback for older versions of Apache (2.2) that do not use mod_authz_core.
  # With no Allow directive, "Order allow,deny" denies every request.
  <IfModule !mod_authz_core.c>
    Order allow,deny
  </IfModule>
</FilesMatch>
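Because a pattern like this is easy to get subtly wrong, it can help to test it before deploying. A rough Python sketch: Apache’s FilesMatch applies a regular expression to the file’s name, and this particular expression uses only syntax that Python’s re module treats the same way, so re.search gives a reasonable approximation. The helper name and sample filenames are illustrative.

```python
import re

# The FilesMatch pattern from the .htaccess excerpt, split across lines for readability.
BLOCKED = re.compile(
    r"\.(engine|inc|info|install|make|module|profile|test|po|sh|.*sql|theme|twig|tpl(\.php)?|xtmpl|yml)(~|\.sw[op]|\.bak|\.orig|\.save)?$"
    r"|^(Entries.*|Repository|Root|Tag|Template|composer\.(json|lock)|web\.config)$"
    r"|cron\.php|install\.php"
    r"|^(CHANGELOG|COPYRIGHT|INSTALL.*|LICENSE|MAINTAINERS|README|UPDATE).txt$"
    r"|^#.*#$"
    r"|\.php(~|\.sw[op]|\.bak|\.orig|\.save)$"
)


def is_blocked(filename: str) -> bool:
    """True if the pattern matches this file name, i.e. Apache would deny it."""
    return BLOCKED.search(filename) is not None


for name in ["index.php", "settings.php.bak", "composer.json",
             "backup.sql", "README.txt", "logo.png"]:
    print(f"{name:20} {'denied' if is_blocked(name) else 'served'}")
```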

Beware the dancing bananas

Modern websites are flashy. They’re “responsive,” changing layout and even functionality depending on all kinds of contexts from what browser you’re using to whether you’re on a phone, to what you clicked or scrolled just a moment ago. They load up videos, pop up notices, move graphics around as you navigate even a single page, producing a dynamic experience much more complicated than the flat walls of text this all started with.

Most, though not entirely all, of this flash (which in some cases actually used to be Flash) is provided by JavaScript, which has grown into a gigantic ecosystem of libraries, frameworks, and even full-blown applications. Every “embedded” feature you see on a website is presented by a script that uses your browser as its own programming environment, running actual code and often making requests to other sites, which do complicated work to send your browser the interactive content or media you’re (hopefully) enjoying. It’s also responsible, of course, for the operation of the entire online ad industry, and all that entails.

Additionally, script-based services are often deeply nested. They inject into your browser not just their own container in which to run a function, but also calls to other scripts, which create their own containers, and so on to whatever depth is wanted.

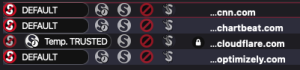

We can illustrate this by visiting CNN’s home page with a browser extension called NoScript enabled. If you “lock it down,” permitting almost nothing, NoScript reports very few scripts under its control.

If you now unblock scripts originating from the main domain, you can see a flood of new scripts demanding access to implement their own functionality, which is often unclear.

As you can imagine, none of this is intrinsically secure. The simple fact that this code runs in your browser, rather than on a server that just sends you the finished result, makes it an immediate risk.

XSS

The classic, and probably most prevalent, category of these risks is Cross-Site Scripting (XSS), of which the following is a somewhat schematic example.

Websites commonly pass information to the application through two kinds of HTTP requests: GET and POST. You can tell that a site is using a GET request if clicking a button or submitting a form generates a URL such as https://bearsrule.org?species=grizzly, with the data visible in the query string. Such a site might pop up a friendly banner: “We love grizzly bears too. Click here to learn more about the grizzly bear.” Links like this are often posted on affiliate sites for advertising or, more commonly these days, all over social media.

Now, what if someone posts a link like this (expanded and commented for illustration):

<!-- URL with a vulnerable parameter 'species' which includes JavaScript injection. -->
https://bearsrule.org?species=<script>
(function() {
  // Define a helper function 'a' to convert character codes to characters.
  var a = String.fromCharCode;
  // Array 'b' contains the character codes for the URL 'https://somesite.com'.
  var b = [104, 116, 116, 112, 115, 58, 47, 47, 115, 111, 109, 101, 115, 105, 116, 101, 46, 99, 111, 109];
  // Array 'c' contains the character codes for the text 'grizzly'.
  var c = [103, 114, 105, 122, 122, 108, 121];
  // Create a new anchor element: 97 is the character code for 'a', so this creates an <a> element.
  var d = document.createElement(a(97));
  // Convert array 'b' to the URL string and set it as the href.
  // (apply passes all codes at once; b.map(a) would also pass map's index and array arguments.)
  d.href = a.apply(null, b);
  // Convert array 'c' to the string 'grizzly' and set it as the anchor text.
  d.innerHTML = a.apply(null, c);
  // Append the newly created anchor element to the document body.
  document.body.appendChild(d);
})();
</script>

The casual user isn’t going to inspect the details of that link. They’re going to think, “this is relevant to my interests,” click it, and get the expected popup. But if the site writes the species parameter back into the page without validating or encoding it, the browser runs the injected script as though it were part of the site: the link in the popup might now go to somesite.com, or the script might call another piece of script, a “payload,” that does something malicious.
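The core defense is to treat the parameter as text, never as markup, when writing it back into the page. A minimal Python sketch using the standard library’s html.escape; the render_banner function, the /bears/ link path, and the species allowlist are hypothetical stand-ins for whatever the real page does.

```python
import html
from urllib.parse import parse_qs, urlparse

KNOWN_SPECIES = {"grizzly", "black", "polar"}  # hypothetical allowlist


def render_banner(url: str) -> str:
    """Build the banner HTML from the ?species= parameter without trusting it."""
    species = parse_qs(urlparse(url).query).get("species", [""])[0]
    if species not in KNOWN_SPECIES:
        # Validation: anything unexpected gets a generic banner instead.
        return "<p>We love bears too.</p>"
    # Output encoding: escape even allowlisted values as a second layer.
    safe = html.escape(species)
    return f'<p>We love {safe} bears too. <a href="/bears/{safe}">Learn more</a></p>'


print(render_banner("https://bearsrule.org?species=grizzly"))
print(render_banner("https://bearsrule.org?species=<script>alert(1)</script>"))
print(html.escape("<script>alert(1)</script>"))  # &lt;script&gt;alert(1)&lt;/script&gt;
```

Either layer alone would stop this particular attack; using both means a gap in one is covered by the other.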

CSP

Fortunately, these risks are well known, and many of them can be defended against with a well-crafted Content Security Policy (CSP). A CSP is a set of rules your server sends along with each page, telling the browser which sources it may load scripts, media, and other resources from, and which connections it may open, especially for external services invoked by scripts running on your own site. The browser blocks anything that doesn’t meet those criteria, including inline scripts like the injected one above, unless the policy explicitly allows them.

CSP rules are usually delivered in the HTTP response headers of the page you’re viewing. They don’t appear in the page’s HTML when you look at it with an “inspector” tool, but if you open the Network panel of the same tool, you can see the data exchanged between your browser and the server that doesn’t show up in the page, but that both sides use behind the scenes to control how content is handled.

The headers on Mozilla’s CSP page include the entry content-security-policy, and a simplified list of its values looks like this (again, expanded and commented for illustration):

# Define default security policy for all resources if not specified otherwise.
default-src 'self';
# Restrict scripts to trusted sources:
script-src
  'self'                                          # Allows scripts hosted on the same origin
  https://www.google-analytics.com/analytics.js   # Google Analytics for tracking
  https://www.googletagmanager.com/gtag/js        # Google Tag Manager for managing tags
  https://js.stripe.com                           # Stripe for payment processing scripts
  *.gravatar.com                                  # Gravatar for user avatars
  mozillausercontent.com;                         # Mozilla content delivery network
# Restrict media files to trusted sources:
media-src
  'self'                                          # Allows media from the same origin
  archive.org                                     # Archive.org for historical and public media files
  videos.cdn.mozilla.net;                         # Mozilla CDN for video content

This tells us that the page will run scripts from the Mozilla site itself, from Google Analytics, and from a few other sources. It also tells us that there are only three sources from which the page will load audio or video: its own origin, archive.org, and videos.cdn.mozilla.net. All others will be blocked.
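On your own site, the policy is simply a header value your server attaches to every response. A minimal sketch as a Python WSGI app, assuming a trimmed-down policy modeled on the one above; the directive list is illustrative, not a recommendation for any particular site.

```python
POLICY = {
    "default-src": ["'self'"],
    "script-src": ["'self'", "https://js.stripe.com"],
    "media-src": ["'self'", "archive.org", "videos.cdn.mozilla.net"],
}


def build_csp(policy: dict) -> str:
    """Join each directive's sources with spaces, and directives with '; '."""
    return "; ".join(f"{name} {' '.join(sources)}" for name, sources in policy.items())


def app(environ, start_response):
    """A tiny WSGI app that attaches the CSP header to every response."""
    start_response("200 OK", [
        ("Content-Type", "text/html; charset=utf-8"),
        ("Content-Security-Policy", build_csp(POLICY)),
    ])
    return [b"<h1>We love bears</h1>"]


# Call the app directly with a stand-in start_response to inspect the header.
captured = {}


def fake_start_response(status, headers):
    captured["status"] = status
    captured["headers"] = dict(headers)


body = app({}, fake_start_response)
print(captured["headers"]["Content-Security-Policy"])
```

For local testing you could serve app with the standard library’s wsgiref.simple_server.make_server; in production the header is more often set in the web server or framework configuration.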

So many bears

Really, the list goes on. All we can do here is give you an overview of the kinds of things you should be aware of, and very brief suggestions of what to do about them.

But you can do better. And in doing so, you can relax and breathe.

Enhance Your Calm

The two truths

Know this: two things are true. Nothing on the web is secure in the strictest, strongest sense. And you’re probably fine.

Security scanners

Your first step toward inner peace is the regular use of security scanning tools. These are programs that walk your website, examining the code that actually gets loaded into the browser, clicking on things, observing behaviors, and comparing what they find to known patterns that suggest a problem. Many of them are commercial products, such as WebInspect and the adorably named Burp Suite, which act like any external “crawler,” except that they’re usually far more aggressively intrusive… which is exactly what you want.

Others, such as the free and open-source ZAP, are programs you can run yourself, either on a dedicated server of your own or in your local development environment, where they can scan sites that haven’t been deployed anywhere yet.

These programs generate reports that will:

- Identify the specific vulnerability.

- Identify the URLs which exhibit the problem.

- Indicate the severity of the issue.

- Usually, demonstrate the method or request that revealed the problem.

- Often, supply boilerplate text describing the risk in detail and recommendations to correct it.

All of these tools, and others, take as their points of reference the security standards published by the Open Worldwide Application Security Project (OWASP), and the Common Vulnerabilities and Exposures (CVE) and Common Weakness Enumeration (CWE) catalogs maintained by the CVE Program and the CWE Program.
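Real scanners run thousands of checks, but the basic shape of one is simple: look at what the site sends and compare it with a known-good pattern. Here is a toy Python illustration, assuming you already have a response’s headers in a dictionary; the three headers checked and the risk notes are chosen for the example, not taken from any particular scanner.

```python
# Headers whose absence scanners commonly flag, each with a one-line risk note.
EXPECTED_HEADERS = {
    "content-security-policy": "no CSP restricting where scripts and media may load from",
    "strict-transport-security": "browsers are not told to use HTTPS only",
    "x-content-type-options": "browsers may MIME-sniff responses",
}


def check_headers(url: str, headers: dict) -> list:
    """Return one finding per expected header that the response lacks."""
    present = {name.lower() for name in headers}  # header names are case-insensitive
    findings = []
    for name, risk in EXPECTED_HEADERS.items():
        if name not in present:
            findings.append({"url": url, "missing": name, "risk": risk})
    return findings


# Hypothetical headers captured from one page of a site.
sample = {"Content-Type": "text/html", "Strict-Transport-Security": "max-age=31536000"}
for finding in check_headers("https://example.com/", sample):
    print(finding["url"], "missing", finding["missing"], "-", finding["risk"])
```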

OWASP

One of OWASP’s most important contributions to website security is the maintenance of the well-known OWASP Top Ten, a periodically updated list of the most critical web application security risks, with guidance on identifying and mitigating each. Reviewing and truly understanding this list, and implementing its recommendations to the extent possible, will go a long way toward fixing problems before they even happen. OWASP even supplies demonstration snippets of how vulnerabilities might be exploited… and you’ll find those sometimes repeated verbatim in those annoying “bug bounty” emails. You can trash that spam with even more confidence if you’ve already read the salient points from the authoritative source.

CWE and CVE

Where OWASP provides guidelines, the CVE and CWE programs, both maintained by the MITRE Corporation, catalog publicly disclosed vulnerabilities in software of all kinds and the underlying weaknesses that cause them. They publish continuously updated listings in a structured format that anyone can access and reference… most particularly security scanners, which report their findings using CVE and CWE identifiers and language.

The important difference between the two is that CVEs are enumerated, specific, and concise: each identifies one known vulnerability in a particular product, such as CVE-2021-44228, the “Log4Shell” flaw in Apache Log4j. They’re the “index” of known problems. CWEs describe the general types of “weakness” that create the conditions for vulnerabilities, such as CWE-89 (SQL injection) or CWE-79 (cross-site scripting), and they are developed by an open community of developers and researchers. It’s the language of these CWEs that pads the security reports of commercial scanners, providing sometimes lengthy explanations and recommendations.

Development tools

Before your application even reaches a live site, to be found by a security scan and reported to you in big scary PDFs, you should do everything possible to clean up and “harden” your code. Everything above suggests things to look out for, and an entire culture of best practices has grown up in developer communities over the years. Look them up. Learn them, and make them as much a part of your development routine as getting that cat off your keyboard. Some of these include:

- Code securely: Validate your inputs, don’t “hard code” credentials or other secrets, and encode or sanitize your outputs as well.

- Approach access control aggressively: Require authentication. Use multi-factor authentication (MFA) where possible. Follow the principle of “least privilege,” allowing users to see only what they specifically should.

- Protect your files: Don’t output direct file paths. Don’t allow directory listings anywhere. Apply correct server permissions to file storage.

- Familiarize yourself with vulnerabilities and their mitigations: You don’t have to read every technical paper published every day, but at the very least you should take advantage of resources such as the OWASP Cheat Sheet Series.

- Keep your software and all its components up to date: The vast proliferation of web technologies and their intricate web of dependencies means that things change fast, and continuously. Don’t use outdated, vulnerable code.

- Use security monitoring and logging: Every major web application can log notices, warnings, and errors that meet various criteria and reflect not only coding problems but potential risks. At the very least, you should be inspecting these regularly.

- Use encryption where possible: Stay current on which encryption algorithms offer what level of security, and which have been deprecated. Know the difference between encryption and hashing.
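On that last point: passwords should be hashed, not encrypted, because a hash can’t be turned back into the password, even by you. A minimal sketch with PBKDF2 from Python’s standard hashlib module; the iteration count is an example value in line with recent OWASP guidance for PBKDF2-HMAC-SHA256, so check the current OWASP Password Storage Cheat Sheet before choosing your own.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # example work factor; check current guidance before use


def hash_password(password: str) -> tuple:
    """Return (salt, digest). Store both; never store the password itself."""
    salt = os.urandom(16)  # a fresh random salt for every password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, digest: bytes) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, digest)


salt, digest = hash_password("gargle blaster")
print(verify_password("gargle blaster", salt, digest))  # True
print(verify_password("pan galactic", salt, digest))    # False
```

Encryption, by contrast, is designed to be reversed by whoever holds the key, which suits data you need to read back later and is the wrong choice for passwords.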

You should also use utilities that scan your code, rather than your running website, and can flag issues well in advance. Modern Integrated Development Environments (IDEs) come equipped with a wide variety of code inspection utilities that flag not just syntax errors or formatting issues in whatever programming language you’re using, but possible security problems. Alongside common “sniffers” for PHP, JavaScript, and so on, IDEs support plugins from vendors such as Checkmarx, with whom ZAP is now affiliated. These plugins analyze the structure and syntax of your program, comparing it against large databases of known potential problems.
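To demystify what those plugins do, here is a toy version of a single rule, written in Python with the standard ast module. It parses source code without running it and flags any .execute(...) call whose query is built by string concatenation or an f-string, the pattern behind the SQL injection example earlier. Real tools track data flow far more thoroughly; the function name and the rule itself are illustrative.

```python
import ast

SAMPLE = '''
cur.execute("SELECT * FROM accounts WHERE custID='" + cust_id + "'")
cur.execute(f"SELECT * FROM accounts WHERE custID='{cust_id}'")
cur.execute("SELECT * FROM accounts WHERE custID = ?", (cust_id,))
'''


def find_string_built_queries(source: str) -> list:
    """Return line numbers of execute() calls whose first argument is built dynamically."""
    flagged = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Attribute)
            and node.func.attr == "execute"
            and node.args
            # BinOp covers "..." + value; JoinedStr covers f-strings.
            and isinstance(node.args[0], (ast.BinOp, ast.JoinedStr))
        ):
            flagged.append(node.lineno)
    return sorted(flagged)


print(find_string_built_queries(SAMPLE))  # [2, 3]
```

The parameterized query on the last line passes, because its first argument is a plain string constant.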

Out of the Woods

It’s scary. Your business depends on your website these days. This is your public face, your reputation, and your exposure to all the perils of commerce. Attacks come from every quarter: from amateur thieves, to organized cartels of shadowy information brokers, to random, faceless, and seemingly purposeless vandalism. It’s natural to be jumpy and reactive.

Arrayed against all this is an entire web security industry: commercial and non-commercial brain trusts of smart, fast-moving people who provide information and services that you can, and must, take advantage of. As a developer, you can learn, and count on the support of a community. As a business owner, you can trust your developer and their agency, and also learn, so that you don’t feel so buffeted by the storm.

Keep the bears at bay and the cat in your lap, no matter what it says.

When it comes to protecting your website from vulnerabilities and staying ahead of cyber threats, you need a partner you can trust. That’s where New Target comes in. With a team of experienced developers and security experts, we ensure that your site is always safe, secure, and running at its best. We stay ahead of the latest security trends, handle the complexities of website protection, and take the worry off your shoulders. You don’t have to deal with the relentless bug bounty spam or panic about potential vulnerabilities—New Target has got your back.

From threat detection to secure coding practices, we’re here to safeguard your digital presence so you can focus on what really matters: growing your business. Reach out to New Target today and experience peace of mind with a fortified, well-protected website.